In the past 10 months of coding, I’ve built up a number of tools and modules that navigate the data I have collected and ratings I have calculated. I had lofty ambitions of using these, and developing more, to provide a comprehensive preview of the upcoming season from the SOLDIER perspective.

It took a while to put into words, but it soon became blindingly obvious that although such a prediction was possible, it would be pointless and inaccurate! The reason is easy to explain. So far, the SOLDIER model has focused on form-based prediction of upcoming games. The game-prediction model uses the recent (5-game) and longer-term (20-game) form of players and teams to predict an outcome from the players selected on the team sheets. Predicting 24 rounds ahead (plus finals) is a very long bow to draw when the ammunition is a set of darts.

The Problem

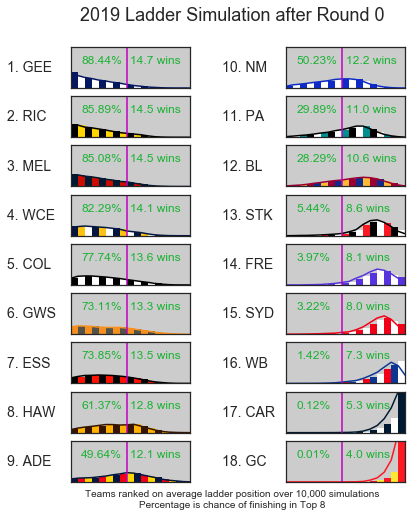

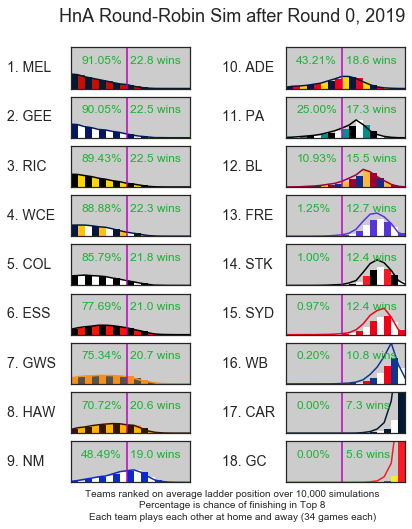

Later in the 2018 season, I started producing weekly predictions that simulated the rest of the season to establish end-of-season predictions based on current form. Naturally, my first port of call in predicting 2019 was to use this method in two ways: pitting the teams against each other on a level playing field (a round robin, with each team playing every other team home and away) to rate each team, and simulating the actual 2019 fixture as a more practical measure.
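As a rough illustration of the round-robin idea, the sketch below plays a home-and-away round robin many times over and counts how often each team finishes in the top few places. The team names, ratings, `home_edge` value and logistic win-probability function are all invented for the example; they are assumptions, not the SOLDIER model.

```python
import random
from itertools import permutations

def win_probability(home_rating, away_rating, home_edge=0.1):
    """Hypothetical logistic link from a rating gap to a home-win probability."""
    return 1.0 / (1.0 + 10 ** (-(home_rating - away_rating + home_edge)))

def simulate_round_robin(ratings, n_sims=2000, top_n=2, seed=1):
    """Return the fraction of simulated seasons each team finishes in the top_n."""
    rng = random.Random(seed)
    top_counts = {team: 0 for team in ratings}
    for _ in range(n_sims):
        wins = {team: 0 for team in ratings}
        # permutations yields every ordered pair, i.e. each pairing home and away
        for home, away in permutations(ratings, 2):
            p_home = win_probability(ratings[home], ratings[away])
            winner = home if rng.random() < p_home else away
            wins[winner] += 1
        # Rank by wins; ties are broken at random rather than by percentage
        ladder = sorted(ratings, key=lambda t: (wins[t], rng.random()), reverse=True)
        for team in ladder[:top_n]:
            top_counts[team] += 1
    return {team: count / n_sims for team, count in top_counts.items()}

ratings = {"A": 0.3, "B": 0.1, "C": 0.0, "D": -0.2}  # invented ratings
top2 = simulate_round_robin(ratings)
```

Swapping the `permutations` loop for a list of real fixtures would give the "actual fixture" variant of the same simulation.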

The results were surprising at first.

Wow, are Geelong that good? Are Sydney that bad? There’s a lot to unpack here, but something doesn’t quite add up. With such a broad prediction, the first sanity check for me is to compare to the bookies. After all, they’re the professionals in this caper. Just looking at the top 8 percentages, the ladder simulation is a lot more certain of things than the odds suggest*. Sydney, in particular, are about a 50% chance with the bookies to get into the top 8.

While this serves as a strong argument for the uselessness of such a long-range prediction, it also serves as a reminder of the strengths and weaknesses of what I’m modelling. Furthermore, it provides direction for what could be done to improve such predictions in the future.

The hurdles to overcome are plentiful if I were to predict a season with the model as it is:

- Which players will play each game?

- What effect does the off-season have?

- How do you account for natural evolution of players?

- Will a team’s game plan change?

- Will rule changes or “in-vogue” tactics change what statistical measures win games?

*Mainly because the player/team form distribution is fixed in the above simulation, rather than evolving under a Brownian-motion-inspired model.
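To see why fixed form makes a simulation overconfident, here is a minimal sketch comparing a team whose rating gap stays constant with one whose gap takes a small random step every game. The logistic link, the 22-game season, the starting gap and the step size are all assumed values chosen only for illustration.

```python
import random
import statistics

def season_wins(start_gap, n_games, step_sd, rng):
    """Simulate one season of wins for a team whose rating gap to its
    opponents starts at start_gap and takes a Gaussian step every game."""
    gap, wins = start_gap, 0
    for _ in range(n_games):
        p_win = 1.0 / (1.0 + 10 ** (-gap))  # hypothetical logistic link
        wins += rng.random() < p_win
        gap += rng.gauss(0.0, step_sd)      # step_sd = 0 keeps form fixed
    return wins

def win_spread(start_gap, step_sd, n_games=22, n_sims=5000, seed=7):
    """Mean and standard deviation of season win totals over many simulations."""
    rng = random.Random(seed)
    totals = [season_wins(start_gap, n_games, step_sd, rng) for _ in range(n_sims)]
    return statistics.mean(totals), statistics.stdev(totals)

fixed_mean, fixed_sd = win_spread(start_gap=0.2, step_sd=0.0)
walk_mean, walk_sd = win_spread(start_gap=0.2, step_sd=0.1)
```

The random-walk version produces a noticeably wider spread of win totals, which is what pulls top-8 percentages back from the extremes.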

1. Which players will play each game?

The SOLDIER model encompasses player statistics and form. The chosen players for each team have a small but noticeable effect on the predicted outcome of the game. For the above simulations I used the “first choice team” as opined on afl.com.au for each club, and adjusted it for known injuries. But of course, no team goes unchanged all season; injuries play a part, younger players get tried out, and selection can be based on the opposition. A more sensible approach would be to look at a squad (say, of 30) and average it out to 22 players, which would be fairly easy to do.
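One way the squad-averaging idea could look is sketched below. Each squad member gets a selection weight, the weights are rescaled to sum to 22, and the team rating is the weighted sum of player ratings. The function name, the single-number player rating and the weights are hypothetical, and with very uneven weights a rescaled weight can exceed one full player.

```python
def expected_team_rating(squad, team_size=22):
    """squad: list of (player_rating, selection_weight) pairs.
    Weights are rescaled so they sum to team_size, then used to
    average the squad down to a notional team of that size."""
    total_weight = sum(weight for _, weight in squad)
    if total_weight <= 0:
        raise ValueError("selection weights must sum to a positive number")
    scale = team_size / total_weight
    return sum(rating * weight * scale for rating, weight in squad)

# Invented example: 30 equally likely players, each rated 10.0
even_squad = [(10.0, 1.0)] * 30
team_rating = expected_team_rating(even_squad)
```

With equal weights every player simply counts as 22/30 of a selection; known injuries could be handled by setting that player’s weight to zero.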

2. What effect does the off-season have?

It’s simple to argue that form will not necessarily carry over the off-season. There are too many immeasurables and unknowables to look at the individual off-seasons and pre-seasons of all 500+ players and adjust “form” accordingly. Are there any rules of thumb? To probe this question, I took player data for the last five home-and-away rounds of 2017 and the first five home-and-away rounds of 2018, and looked at the difference in each player’s average performance between these two periods. While most stats dropped going into the new season, the drops were not statistically significant. As an easily relatable example, across the 293 qualifying players for this study, players scored on average 0.5 fewer Supercoach points after the season break (p=0.7)*.
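For anyone wanting to try a before-and-after comparison like this, one simple option is a sign-flip permutation test on the per-player differences, sketched below. This is not necessarily the test used to get the p-value above, and the function name and sample numbers are invented.

```python
import random
import statistics

def paired_sign_flip_test(before, after, n_perm=5000, seed=0):
    """Two-sided sign-flip permutation test on paired differences.
    Returns (mean difference, approximate p-value)."""
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(after, before)]
    observed = statistics.mean(diffs)
    extreme = 0
    for _ in range(n_perm):
        # Under the null, each player's change is equally likely to be + or -
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(statistics.mean(flipped)) >= abs(observed):
            extreme += 1
    return observed, (extreme + 1) / (n_perm + 1)

# Invented per-player averages either side of the break
late_2017 = [82.0, 95.5, 70.2, 101.3, 88.8, 64.0]
early_2018 = [80.1, 97.0, 69.5, 99.8, 90.2, 63.1]
mean_change, p_value = paired_sign_flip_test(late_2017, early_2018)
```

A large p-value here would mean the average change is no bigger than what random sign flips of the same differences produce.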

A further thought was that less- and more-experienced players may be affected differently by the summer break. Splitting the already-filtered data into players with fewer than 50 games at the end of 2017 (N=92) and those with more than 200 games (N=28) also proved fruitless, with no statistically significant differences across the break. There’s more that could be looked at here, but I strongly suspect it would yield little progress.

*This isn’t the best measure to use here as Supercoach points are scaled per game, but the p-values are similar for other unscaled measures.

3. How do you account for the natural evolution of players?

Sam Walsh will definitely play this year, barring tragedy. So, how does one predict what Sam Walsh will produce this year? There is no data on how he performs against other AFL-quality teams in games for premiership points. How could I handle him and every other rookie that may or may not play this year?

Currently, if a debutant is playing, the model assigns the player’s performance as a plain old average of every first-game player’s historical outputs, regardless of draft pick, playing role, team, etc. From the player’s second game onwards, it uses their personal recorded data. This is a decent trade-off for simplicity in handling debutants on a week-by-week basis, but it does not hold for long-term predictions.
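The fallback described above might look something like this minimal sketch. The function name is hypothetical, and averaging a player’s own games is an assumption, since the post only says their personal recorded data is used.

```python
from statistics import mean

def expected_output(player_history, debut_outputs):
    """Use a player's own recorded games when any exist; otherwise fall
    back to the plain average of all historical first-game outputs."""
    if player_history:
        # Assumption: own data is summarised by a simple average
        return mean(player_history)
    return mean(debut_outputs)

historical_debuts = [40, 60]                            # invented debut scores
rookie = expected_output([], historical_debuts)         # no games yet
second_gamer = expected_output([80.0], historical_debuts)
```

For a season-long projection the weakness is plain: every rookie starts from the same number, whatever their draft pick or role.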

HPNFooty have done some magnificent work with player-value projection based on analysing a current player’s output and comparing it with other players on a similar trajectory, discounting for other factors such as player age. On a slightly different arc, The Arc has used clustering algorithms to classify players into particular roles. By implementing similar concepts and merging the two together, it could be possible to (manually) assign a debutant a playing role (say, key defender or small forward) to project a more meaningful prediction for a season ahead.

Another thought this question brought up is how to handle players undergoing positional change (e.g. James Sicily, Tom McDonald) and old fogies put out to stud in the forward line (GAJ), but these are one-offs that are probably not worth trying to manually override.

4. Will a team’s game plan change?

While player performance is a focus of this model, as important (if not more so) are the team measures that input into the predictions. Each team itself gets a rating in 6/7 SOLDIER categories based on team form. These measures incorporate team-aggregate statistics that cannot be allocated to individual players (say, tackles per opposition contested possessions). These could, conceivably, be a function of both team performance as a whole and the team’s game plan.

How well does this team form carry over to a new season? Do big personnel changes effect a noticeable change in a team’s output? These are questions I planned to have answered before this moment but they’ll have to wait.

5. Will rule changes affect game balance?

The AFL is an evolving competition. Rule changes are so frequent that the game never really settles to a point where all strategies under a given set of rules have been explored. Having said that, assuming the player and team performances are projected as well as possible over a whole season, will the model’s prediction be accurate when the effect of rule changes is unknown?

The fit of the model is updated every round when there is fresh data. It compares the player and team SOLDIER scores, as calculated from the published statistics, and fits them against the game results. More recent games are weighted more strongly to reflect the prevailing style of football – and what combinations and strategies beat what combinations and strategies. Significantly better results have been obtained using this approach rather than using all historic data equally weighted to fit the model.
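To make the recency weighting concrete, here is a small sketch of a weighted least-squares fit where older games count for less. The exponential decay, the 20-round half-life and the single-feature linear fit are assumptions for illustration; the post does not say which weighting scheme or model form is used.

```python
def recency_weights(ages_in_rounds, half_life=20.0):
    """Exponential decay: a game half_life rounds old counts half as much."""
    return [0.5 ** (age / half_life) for age in ages_in_rounds]

def weighted_linear_fit(x, y, weights):
    """Weighted least squares for y = slope * x + intercept."""
    total = sum(weights)
    x_bar = sum(w * xi for w, xi in zip(weights, x)) / total
    y_bar = sum(w * yi for w, yi in zip(weights, y)) / total
    sxx = sum(w * (xi - x_bar) ** 2 for w, xi in zip(weights, x))
    sxy = sum(w * (xi - x_bar) * (yi - y_bar)
              for w, xi, yi in zip(weights, x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

# Invented data: rating gap vs. final margin, with each game's age in rounds
rating_gap = [0.5, -0.2, 0.1, 0.8, -0.6]
margin = [22.0, -5.0, 9.0, 30.0, -28.0]
age = [0, 3, 10, 25, 40]
slope, intercept = weighted_linear_fit(rating_gap, margin, recency_weights(age))
```

Setting every weight to 1 recovers the equally weighted fit, which makes it easy to compare the two approaches on the same data.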

The effect the new 2019 rules will have is very much unknown, and the tactical response from teams will naturally evolve over the season.

Conclusion

I had planned on presenting more data to back up the above points, but time got the better of me. Hopefully I’ll expand on this throughout the season.

Without a number of pending and unplanned improvements to my processes, a long-term prediction covering a whole season is not going to be meaningfully indicative of reality. Sure, Geelong could top the ladder, but for the above reasons I wouldn’t bank on it!